I have wanted to try this for a while and I did try setting up an interface to the Hessenberg-triangular form from LAPACK with the following:

using LinearAlgebra

using LinearAlgebra: checksquare, libblastrampoline

using LinearAlgebra.LAPACK: BlasInt, @chkvalidparam, @blasfunc, chkstride1, chklapackerror

using Base: require_one_based_indexing

for (gghd3, elty) in

((:dgghd3_,:Float64),

(:sgghd3_,:Float32))

@eval begin

function gghd3!(jobq::AbstractChar, jobz::AbstractChar,

A::AbstractMatrix{$elty}, B::AbstractMatrix{$elty})

require_one_based_indexing(A, B)

@chkvalidparam 1 jobq ('N', 'I')

@chkvalidparam 2 jobz ('N', 'I')

chkstride1(A, B)

n, m = checksquare(A, B)

ilo = 1

ihi = n

if n != m

throw(DimensionMismatch(

lazy"dimensions of A, ($n,$n), and B, ($m,$m), must match"))

end

ldq = jobq == 'I' ? max(1, n) : 1

q = similar(A, $elty, ldq, n)

ldz = jobz == 'I' ? max(1, n) : 1

z = similar(A, $elty, ldz, n)

work = Vector{$elty}(undef, 1)

lwork = BlasInt(-1)

info = Ref{BlasInt}()

for i = 1:2 # first call returns lwork as work[1]

ccall((@blasfunc($gghd3), libblastrampoline), Cvoid,

(Ref{UInt8}, Ref{UInt8}, #compq, compz

Ref{BlasInt}, Ref{BlasInt}, Ref{BlasInt}, # n, ilo, ihi

Ptr{$elty}, Ref{BlasInt}, Ptr{$elty}, # A, lda, B

Ref{BlasInt}, Ptr{$elty}, Ref{BlasInt}, # ldb, Q, ldq

Ptr{$elty}, Ref{BlasInt}, Ptr{$elty}, # Z, ldz, work

Ref{BlasInt}, Ref{BlasInt}, # lwork, info

Clong, Clong, Clong),

jobq, jobz,

n, ilo, ihi, A, max(1,stride(A, 2)), B,

max(1,stride(B, 2)), q, ldq, z,

ldz, work, lwork, info, 1, 1, 1)

chklapackerror(info[])

if i == 1

lwork = BlasInt(real(work[1]))

resize!(work, lwork)

end

end

UpperHessenberg(A), UpperTriangular(B),

q[1:(jobq == 'I' ? n : 0),:], z[1:(jobz == 'I' ? n : 0),:]

end

end

end

function hess_triangular_form(A,B)

Q1, R = qr(B)

Ta, Tb, Q, Z = gghd3!('I', 'I', Q1'*A, R)

return Ta, Tb, Q1*Q, Z

end

This can be used to solve the system with something like

using LinearAlgebra

n=100

A = randn(n,n)

B = randn(n,n)

Ta, Tb, Q, Z = hess_triangular_form(A,B)

@show opnorm(A-Q*Ta*Z', Inf)

@show opnorm(B-Q*Tb*Z', Inf)

b = randn(n)

y = Q'*A'*copy(b)

λ = 7.0

ldiv!(UpperHessenberg(Ta + λ * Tb), y)

x = Z*y

@show norm((A + λ * B) * x - A'*b)

which gives output:

opnorm(A - Q * Ta * Z', Inf) = 2.580362139237291e-13

opnorm(B - Q * Tb * Z', Inf) = 2.2466750682070824e-13

norm((A + λ * B) * x - A' * b) = 7.710934030831613e-13

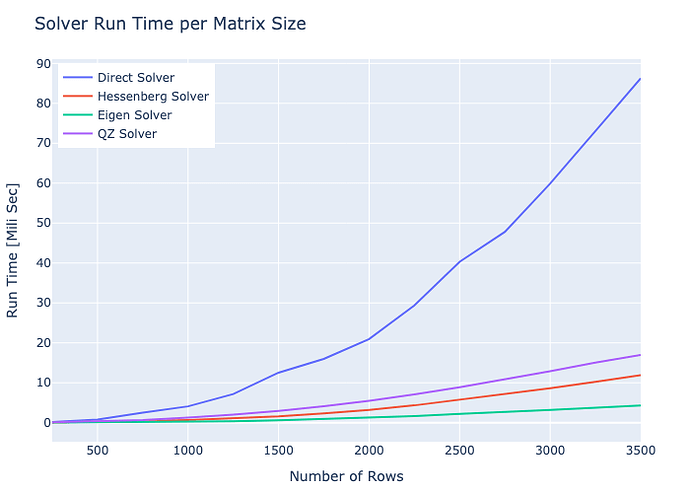

Solving should be O(n^2) once the Hessenberg-triangular form is computed with cost O(n^3). The built-in schur(A,B) in Julia goes a bit further than Hessenberg-triangular form and makes A quasi-triangular (with at most 2\times 2 blocks on the diagonal) and B triangular. It can be used in the same way, since it’s a more structured Hessenberg-triangular form. I thought the basic Hessenberg triangular form would be a substantial performance improvement over going to the full Schur form, but again with random A and B, for n=1000 I get

julia> @benchmark hess_triangular_form(A,B)

BenchmarkTools.Trial: 2 samples with 1 evaluation per sample.

Range (min … max): 3.845 s … 3.933 s ┊ GC (min … max): 0.04% … 0.00%

Time (median): 3.889 s ┊ GC (median): 0.02%

Time (mean ± σ): 3.889 s ± 62.658 ms ┊ GC (mean ± σ): 0.02% ± 0.03%

and

julia> @benchmark schur(A,B)

BenchmarkTools.Trial: 2 samples with 1 evaluation per sample.

Range (min … max): 4.608 s … 4.658 s ┊ GC (min … max): 0.00% … 0.02%

Time (median): 4.633 s ┊ GC (median): 0.01%

Time (mean ± σ): 4.633 s ± 35.495 ms ┊ GC (mean ± σ): 0.01% ± 0.01%

(Note in the above numbers, the computer I’m running this on is ancient.)

So it appears to be pretty marginal. Profiling suggests that hess_triangular_form is spending most of its time in the ccall, so I don’t think the allocations and the simple set-up of the problem is taking much time. I’m a bit surprised, but maybe the Hessenberg-triangular form is a larger percentage of the schur run time than I thought it was. I was expecting half or less.