1. In this code:

- I am trying to find a bounding box for a mesh element and create a RegularGrid with dims = (10, 10, 10) on that box.

- I will then keep only those cuboids of this RegularGrid that are inside the element.

- I already have color values at the element's vertices. I will then interpolate these color values at the cuboids' vertices to get a volume plot.

using Meshes, CoordRefSystems, GLMakie, LinearAlgebra, Unitful

r = Float32[1.6, 1.754088, 1.9230156, 2.1082115, 2.311243, 2.5338273, 2.7778475, 3.0453684, 3.3386526, 3.6601818, 4.012676, 4.3991165, 4.8227735, 5.287231, 5.7964177, 6.354642, 6.9666257, 7.637547, 8.373081]

θ = Float32[0.0, 0.09817477, 0.19634955, 0.2945243, 0.3926991]

ϕ = Float32[0.0, 0.3926991, 0.7853982, 1.1780972, 1.5707964, 1.9634954, 2.3561945, 2.7488935, 3.1415927, 3.5342917, 3.9269907, 4.3196898, 4.712389, 5.105088, 5.497787, 5.8904862, 6.2831855]

g = RectilinearGrid{𝔼,typeof(Spherical(0,0,0))}(r, θ, ϕ)

hxdrn = element(g,1)

spherpts = coords.(vertices(hxdrn))

cartpts = convert.(Cartesian, spherpts)

points = [Meshes.Point(Float64.(ustrip.((c.x, c.y, c.z)))...) for c in cartpts]

sel = Hexahedron(tuple(points...))

bb = Meshes.boundingbox(sel)

dbb = RegularGrid(bb.min, bb.max, dims = (10, 10, 10))

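cuboids = Int[] # indices of the grid cells that are kept

for cl in 1:nelements(dbb) # loop over every cell index, not over the single number nelements(dbb)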

c = vertex(element(dbb,cl),1)

if c ∈ sel # keep cuboid if inside the element

push!(cuboids, cl)

end

end

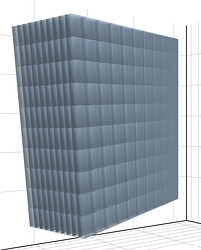

viz!(dbb, alpha = 0.4, showsegments = true, pointsize = 20, pointcolor = :Green)

viz!(sel)

But the check c ∈ sel throws an error:

ERROR: MethodError: no method matching ntuple(::Meshes.var"#761#762"{Meshes.Point{…}}, ::Quantity{Float64, 𝐋, Unitful.FreeUnits{…}})

The function ntuple exists, but no method is defined for this combination of argument types.

2. Another problem is that this code:

using Meshes

h = Hexahedron((0,0,0),(1,0,0),(1,1,0),(0,1,0),(0,0,1),(1,0,1),(1,1,1),(0,1,1))

h2 = Hexahedron((0,0,0),(2,0,0),(2,2,0),(0,2,0),(0,0,2),(2,0,2),(2,2,2),(0,2,2))

h ⊆ h2

gives the following error:

ERROR: MethodError: no method matching isconvex(::Hexahedron{CoordRefSystems.Cartesian3D{CoordRefSystems.NoDatum, Unitful.Quantity{…}}, 𝔼{3}})

The function isconvex exists, but no method is defined for this combination of argument types.

How can I make the volume plot for colors given at each vertex?
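For the interpolation step, here is a minimal sketch of what I have in mind. It uses plain Julia (no Meshes calls) and assumes trilinear interpolation in the local coordinates (u, v, w) ∈ [0, 1]³ of the hexahedron, with the same vertex order as in h above (bottom face counter-clockwise, then top face). The function name trilinear and the sample colors are made up for illustration. How to get (u, v, w) for a grid vertex inside the curved spherical element is also an assumption; one option might be to normalize its (r, θ, ϕ) coordinates within the element.

```julia
# Trilinear interpolation of 8 vertex values at local coordinates (u, v, w) in [0, 1]^3.
# Assumed vertex order: bottom face counter-clockwise, then top face.
function trilinear(vals, u, v, w)
    length(vals) == 8 || throw(ArgumentError("expected 8 vertex values"))
    # interpolate along u on the four edges parallel to the first local axis
    b0 = (1 - u) * vals[1] + u * vals[2]  # bottom face, v = 0
    b1 = (1 - u) * vals[4] + u * vals[3]  # bottom face, v = 1
    t0 = (1 - u) * vals[5] + u * vals[6]  # top face, v = 0
    t1 = (1 - u) * vals[8] + u * vals[7]  # top face, v = 1
    # then along v on each face, and finally along w between the faces
    bottom = (1 - v) * b0 + v * b1
    top = (1 - v) * t0 + v * t1
    return (1 - w) * bottom + w * top
end

colors = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]  # hypothetical vertex values

at_first_vertex = trilinear(colors, 0.0, 0.0, 0.0)  # reproduces colors[1]
at_center = trilinear(colors, 0.5, 0.5, 0.5)        # average of the 8 values
```

At a vertex the sketch returns that vertex's value, and at the center it returns the mean of the eight values, which is the behavior I would expect from the interpolation on each kept cuboid vertex.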