A cleaner & simpler example of a 2-state/2-choice problem is the Uzawa-Lucas model.

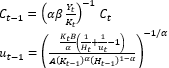

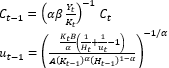

A special case (full depreciation & log utility) has a closed form solution:

The Euler equations if \gamma =0 (no externality)

Now in Julia

using Interpolations, Plots, LinearAlgebra, SpeedMapping

@inline approx(gr, val0) = LinearInterpolation(gr, val0, extrapolation_bc = Line())

#

α=.33; β=.97; #σ=1;δ=0;A=1.0;

F(K,H,u;α=α) = (K^α)*(u^(1-α))*(H^(1-α))

#

γ=0.0;

ū=((1-α)*(1-β))/(1-α+β*γ)

B= 1/(1.0 - ū) + .02

#B= 1/(1.0 - ū) - .02

#B= 1/(1.0 - ū)

#

S1 = [(.01 : .01 : 1.0);]

S2 = [(.01 : .01 : 1.0);] #S = [S1 S2] PolyBasis(S1, S2)

#

T1(s1,s2,c1,c2) = F(s1,s2,c2) -c1

T2(s1,s2,c1,c2; B=B) = B*(1-c2)*s2

#

c1_EE1(s1,s2,s1p,s2p,c1p,c2p; α=α,β=β) = c1p*((β*α*(F(s1p,s2p,c2p)/s1p))^(-1))

c1_min(s1,s2) = 0.00001 # c1 >= 0.0

c1_max(s1,s2) = F(s1,s2,1.0) # c1 <=

#

c2_EE2(s1,s2,s1p,s2p,c1p,c2p;B=B,α=α) = ((s1p*B/α)*(1/s2p +1/c2p -1)/((s1^α)*(s2^(1-α))))^(-1/α)

c2_min(s1,s2) = 0.0

c2_max(s1,s2) = 1.0

#

EE1(s1,s2,s1p,s2p,c1p,c2p) = clamp(c1_EE1(s1,s2,s1p,s2p,c1p,c2p), c1_min(s1,s2), c1_max(s1,s2))

EE2(s1,s2,s1p,s2p,c1p,c2p) = clamp(c2_EE2(s1,s2,s1p,s2p,c1p,c2p), c2_min(s1,s2), c2_max(s1,s2))

#

Π10 = [(1-α*β)*F(s1,s2,ū) for s1 in S1, s2 in S2] #

Π20 = [ū for s1 in S1, s2 in S2] #

#

function FPI_EE_map!(Π1p, Π2p, Π1, Π2, S1, S2)

#

Π1h = approx((S1, S2), Π1)

Π2h = approx((S1, S2), Π2)

S1p = [T1(S1[j], S2[k], Π1[j,k], Π2[j,k]) for j in eachindex(S1), k in eachindex(S2)]

S2p = [T2(S1[j], S2[k], Π1[j,k], Π2[j,k]) for j in eachindex(S1), k in eachindex(S2)]

C1p = [Π1h(S1p[j,k], S2p[j,k]) for j in eachindex(S1), k in eachindex(S2)]

C2p = [Π2h(S1p[j,k], S2p[j,k]) for j in eachindex(S1), k in eachindex(S2)]

Π1p .= [EE1(S1[j], S2[j], S1p[j,k], S2p[j,k], C1p[j,k], C2p[j,k]) for j in eachindex(S1), k in eachindex(S2)]

Π2p .= [EE2(S1[j], S2[j], S1p[j,k], S2p[j,k], C1p[j,k], C2p[j,k]) for j in eachindex(S1), k in eachindex(S2)]

end

#res = speedmapping(Π10,Π20; (m!)=(Π1p, Π2p, Π1, Π2) -> FPI_EE_map!(Π1p, Π2p, Π1, Π2, S1, S2)) #, tol=1e-10

@inline function FPI_EE(S1, S2, Π1, Π2; k_max=100, tol=0.001)

dist = 10.0; k=1;

Π1p = similar(Π1)

Π2p = similar(Π2)

@inbounds while(dist > tol && k<=k_max)

println(k)

#

Π1h = approx((S1, S2), Π1)

Π2h = approx((S1, S2), Π2)

S1p = [T1(S1[j], S2[k], Π1[j,k], Π2[j,k]) for j in eachindex(S1), k in eachindex(S2)]

S2p = [T2(S1[j], S2[k], Π1[j,k], Π2[j,k]) for j in eachindex(S1), k in eachindex(S2)]

C1p = [Π1h(S1p[j,k], S2p[j,k]) for j in eachindex(S1), k in eachindex(S2)]

C2p = [Π2h(S1p[j,k], S2p[j,k]) for j in eachindex(S1), k in eachindex(S2)]

Π1p .= [EE1(S1[j], S2[j], S1p[j,k], S2p[j,k], C1p[j,k], C2p[j,k]) for j in eachindex(S1), k in eachindex(S2)]

Π2p .= [EE2(S1[j], S2[j], S1p[j,k], S2p[j,k], C1p[j,k], C2p[j,k]) for j in eachindex(S1), k in eachindex(S2)]

#

dist = 0.5*norm(Π1 - Π1p) + 0.5*norm(Π2 - Π2p)

k += 1

Π1 = Π1p

Π2 = Π2p

end

return Π1, Π2 #, va

end

@time Pol1_EE, Pol2_EE = FPI_EE(S1, S2, Π10, Π20; k_max=600, tol=0.000001)

Strangely, plugging in the closed form solution as my guess doesn’t seem to converge…