For a while now I have suspected that I have some misconceptions about dynamic dispatch, and this use case may illustrate my doubts well.

So, I find myself in the unpleasant situation of having to use containers with abstract element types.

Consider the following MWE:

    abstract type MyAbstract end

    struct Beta <: MyAbstract end

    struct Alpha{T<:MyAbstract} <: MyAbstract
        a::Int
        allcompanion::Vector{T}
    end

    function dosomething(alpha)
        companion = first(alpha.allcompanion)
        return dosomethingelse(alpha, companion)
    end

    function dosomethingelse(alpha::Alpha, companion::Alpha)
        return alpha.a + companionjob(companion)
    end

    function companionjob(companion::Alpha)
        return companion.a
    end

    function companionjob(companion::Beta)
        return 1
    end

    alphabeta = Alpha(1, [Beta(), Beta(), Beta()])

    alphaalpha1 = Alpha(1, Alpha[])
    alphaalpha2 = Alpha(2, Alpha[])
    alphaalpha3 = Alpha(3, Alpha[])

    push!(alphaalpha1.allcompanion, alphaalpha2, alphaalpha3)
    push!(alphaalpha2.allcompanion, alphaalpha1, alphaalpha3)
    push!(alphaalpha3.allcompanion, alphaalpha1, alphaalpha2)

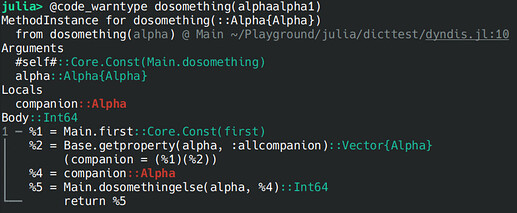

Running `@code_warntype dosomething(alphaalpha1)` obviously complains.

Unfortunately, `dosomething` is in a hot loop, and profiling shows a lot of dynamic dispatch happening there.

I have a few questions:

1. I think it is crystal clear to a human reader what should happen in `dosomething(::Alpha{Alpha})`: `companionjob(::Alpha)` will be executed, and since we never access the abstractly typed `allcompanion` field of the `companion`, no surprises are expected. Do you agree? If so, why does the compiler have problems?

2. Does the compiler really have problems, and does dynamic dispatch really happen here with a performance hit, or could this be a false positive since, as I said in 1., all the generated code should be predictable?

3. What can I do to eliminate any performance issues caused by dynamic dispatch? I am willing to go all the way into internals and hacks.
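To make the reasoning behind question 1 concrete, here is a minimal sketch of the checks I have in mind. It re-declares a shortened two-element version of the MWE so that it runs on its own; the variable names `container_type`, `element_type` and `applicable_methods` are only for illustration. It shows which types are concrete and how many `companionjob` methods apply to an argument known only as `Alpha`. It does not measure whether dispatch actually costs anything at run time.

```julia
# Shortened re-declaration of the MWE so this snippet is self-contained.
abstract type MyAbstract end
struct Beta <: MyAbstract end
struct Alpha{T<:MyAbstract} <: MyAbstract
    a::Int
    allcompanion::Vector{T}
end

companionjob(companion::Alpha) = companion.a
companionjob(companion::Beta) = 1
dosomethingelse(alpha::Alpha, companion::Alpha) = alpha.a + companionjob(companion)
dosomething(alpha) = dosomethingelse(alpha, first(alpha.allcompanion))

alphaalpha1 = Alpha(1, Alpha[])
alphaalpha2 = Alpha(2, Alpha[])
push!(alphaalpha1.allcompanion, alphaalpha2)
push!(alphaalpha2.allcompanion, alphaalpha1)

# The outer object has a concrete type, but the element type of its vector is not concrete.
container_type = typeof(alphaalpha1)              # Alpha{Alpha}
element_type = eltype(alphaalpha1.allcompanion)   # Alpha, i.e. Alpha{T} where T<:MyAbstract
@show isconcretetype(container_type) isconcretetype(element_type)

# Methods of companionjob that can match an argument known only to be some Alpha.
applicable_methods = methods(companionjob, (Alpha,))
@show length(applicable_methods)

# The field `a` is declared as Int independently of the parameter T.
@show fieldtype(Alpha{Alpha}, :a) fieldtype(Alpha{Beta}, :a)

# What inference concludes for the whole call; I make no claim here about the result.
@show Base.return_types(dosomething, (container_type,))
```

If only one method is applicable and the field type does not depend on `T`, that is the basis for my expectation in question 1 that nothing unpredictable should happen, even though the element type is reported as non-concrete.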