Hello,

I am using JuMP for solving optimal control of a car on a track. I am trying to reformulate my problem, to improve the sparsity pattern of jacobian of constraints and Hessian of Lagrangian. I have managed to get the Hessian to nice form. For the query of Hessian and Lagrangian I am using this Computing Hessians · JuMP This is the Hessian:

julia> UnicodePlots.spy(H_star)

┌──────────────────────────────┐

1 │⣿⡻⢿⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ > 0

│⠛⠓⢰⣶⣶⣶⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│ < 0

│⠀⠀⢸⣿⣞⢝⣀⣀⣀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│

│⠀⠀⠀⠀⠀⢸⣿⣻⢿⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│

│⠀⠀⠀⠀⠀⠘⠛⠓⢱⣶⣶⣶⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│

│⠀⠀⠀⠀⠀⠀⠀⠀⢸⣿⣞⢝⣀⣀⣀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│

│⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⢸⣿⣻⢿⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│

│⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠘⠛⠓⢱⣶⡶⡆⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀│

│⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠸⠯⠾⢇⣀⣀⡀⠀⠀⠀⠀⠀⠀⠀⠀⠀│

│⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⢸⡿⣫⡇⠀⠀⠀⠀⠀⠀⠀⠀⠀│

│⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠉⠉⢱⣶⡶⡆⠀⠀⠀⠀⠀⠀│

│⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠸⠯⠾⢇⣀⣀⡀⠀⠀⠀│

│⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⢸⡿⣫⡇⠀⠀⠀│

│⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠈⠉⠉⢱⣶⡶⡆│

90 │⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠸⠯⠾⠇│

└──────────────────────────────┘

⠀1⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀90⠀

⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀544 ≠ 0⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀

But I am having trouble doing the same with jacobian. No matter how I reorder the constraints and variables, there are always multiple bands:

julia> UnicodePlots.spy(jacobian)

┌───────────┐

1 │⠛⠿⣷⣆⡀⠀⠀⠀⠀⠀⠀│ > 0

│⠀⠀⠉⠛⣿⣧⣄⡀⠀⠀⠀│ < 0

│⠀⠀⠀⠀⠀⠉⠹⢷⣶⣤⠀│

│⠀⠆⠦⣄⣀⡀⠀⠀⠘⠛⠋│

│⠀⣤⡄⠀⠀⠉⠉⠑⠐⠲⠤│

│⠀⠉⠛⠷⣦⣄⡀⠀⠀⠀⠀│

│⠀⠀⠀⠀⠀⠉⠙⠳⢶⣤⣀│

│⣣⠰⢂⠀⠀⠀⠀⠀⠀⠈⠉│

│⠀⠀⠈⠱⢂⠄⡀⠀⠀⠀⠀│

│⠀⠀⠀⠀⠀⠈⠁⠃⡤⣀⠀│

│⠄⠄⠄⠄⡀⡀⢀⢀⠀⠉⠚│

│⠈⢘⡐⠐⠐⠀⠆⠄⠌⠌⠄│

│⠊⠈⠰⢦⡀⠀⠀⠀⠀⠀⠀│

│⠀⠀⠀⠀⠐⠡⠂⡄⡀⠀⠀│

236 │⠀⠀⠀⠀⠀⠀⠀⠀⠉⠖⡠│

└───────────┘

⠀1⠀⠀⠀⠀⠀⠀⠀⠀90⠀

⠀⠀⠀968 ≠ 0⠀⠀⠀

julia> list_of_constraint_types(model)

6-element Vector{Tuple{Type, Type}}:

(NonlinearExpr, MathOptInterface.EqualTo{Float64})

(NonlinearExpr, MathOptInterface.GreaterThan{Float64})

(NonlinearExpr, MathOptInterface.LessThan{Float64})

(AffExpr, MathOptInterface.EqualTo{Float64})

(AffExpr, MathOptInterface.GreaterThan{Float64})

(AffExpr, MathOptInterface.LessThan{Float64})

I am beginning to think, it has to do something with the constraints being different types.

Does it make sense to try to force them to be banded diagonal, or are they represented differently in memory and this is just a visualisation which has no impact? Could you please link me resources where this is explained?

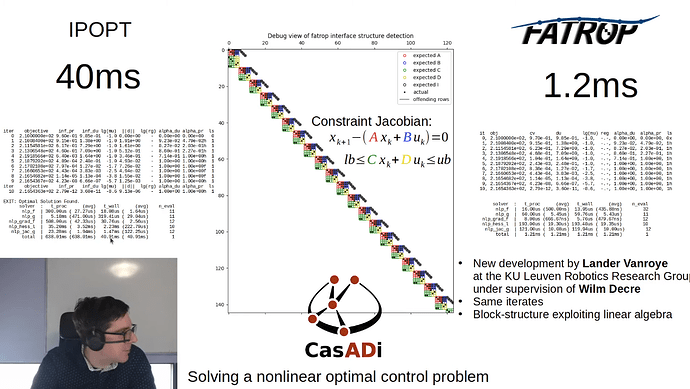

My goal is to try to get it looking similar to this video https://youtu.be/X-nS4i8jJyM?si=18TSLoxiMOP1IcBl&t=104

Code which queries jacobian

using JuMP

import Ipopt

import LinearAlgebra

import Random

import SparseArrays

import MathOptInterface as MOI

function compute_optimal_jacobian(model::Model)

rows = Any[]

nlp = MOI.Nonlinear.Model()

for (F, S) in list_of_constraint_types(model)

for ci in all_constraints(model, F, S)

if !(F <: VariableRef)

push!(rows, ci)

object = constraint_object(ci)

MOI.Nonlinear.add_constraint(nlp, object.func, object.set)

end

end

end

MOI.Nonlinear.set_objective(nlp, objective_function(model))

x = all_variables(model)

backend = MOI.Nonlinear.SparseReverseMode()

evaluator = MOI.Nonlinear.Evaluator(nlp, backend, index.(x))

# Initialize the Jacobian

MOI.initialize(evaluator, [:Jac])

# Query the Jacobian structure

sparsity = MOI.jacobian_structure(evaluator)

I, J, V = first.(sparsity), last.(sparsity), zeros(length(sparsity))

# Query the Jacobian values

MOI.eval_constraint_jacobian(evaluator, V, value.(x))

return SparseArrays.sparse(I, J, V, length(rows), length(x))

end

jacobian = compute_optimal_jacobian(model)

GLMakie.spy(reverse(jacobian,dims=1))

UnicodePlots.spy(jacobian)