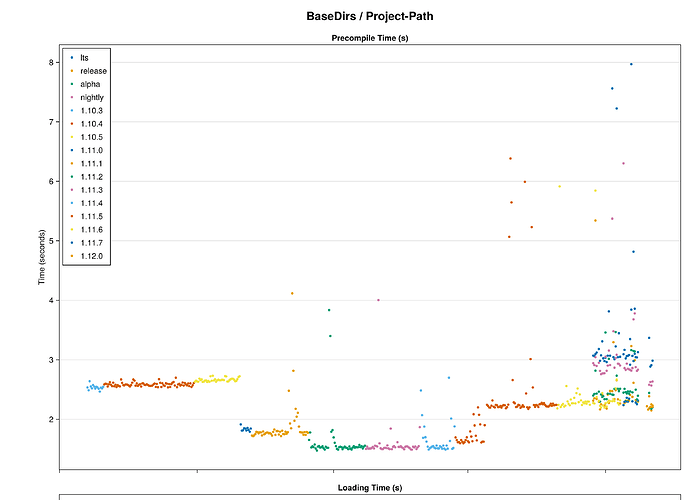

package_name,task_name,date,julia_version,hostname,hash,precompile_time,loading_time,task_time,precompile_cpu,task_cpu,precompile_resident,task_resident

BaseDirs,Project-Path,2024-05-26,1.10.3,jeb-kr-amherst,8399c9b,2.534864,0.023005962371826172,0.004840850830078125,147.0,294.0,358416,247344

BaseDirs,Project-Path,2024-05-27,1.10.3,jeb-kr-amherst,8399c9b,2.486408,0.01497197151184082,0.0037360191345214844,145.0,257.0,358780,247220

BaseDirs,Project-Path,2024-05-28,1.10.3,jeb-kr-amherst,8399c9b,2.639432,0.014880895614624023,0.0037300586700439453,152.0,258.0,358172,247476

BaseDirs,Project-Path,2024-05-29,1.10.3,jeb-kr-amherst,8399c9b,2.551279,0.014980077743530273,0.003719806671142578,142.0,254.0,358464,247124

BaseDirs,Project-Path,2024-05-30,1.10.3,jeb-kr-amherst,8399c9b,2.519,0.01501011848449707,0.0037288665771484375,142.0,256.0,358892,247216

BaseDirs,Project-Path,2024-05-31,1.10.3,jeb-kr-amherst,8399c9b,2.574519,0.014948844909667969,0.0037670135498046875,142.0,256.0,358332,247476

BaseDirs,Project-Path,2024-06-01,1.10.3,jeb-kr-amherst,8399c9b,2.548266,0.014998197555541992,0.0037708282470703125,143.0,256.0,358696,247476

BaseDirs,Project-Path,2024-06-02,1.10.3,jeb-kr-amherst,8399c9b,2.514172,0.014941930770874023,0.0037260055541992188,144.0,257.0,358636,247004

BaseDirs,Project-Path,2024-06-03,1.10.3,jeb-kr-amherst,8399c9b,2.544201,0.014866113662719727,0.003720998764038086,147.0,256.0,390208,247232

BaseDirs,Project-Path,2024-06-04,1.10.3,jeb-kr-amherst,8399c9b,2.534858,0.015110015869140625,0.003737926483154297,142.0,257.0,358448,247468

BaseDirs,Project-Path,2024-06-05,1.10.3,jeb-kr-amherst,8399c9b,2.509259,0.014950990676879883,0.003728151321411133,147.0,256.0,359120,247472

BaseDirs,Project-Path,2024-06-06,1.10.3,jeb-kr-amherst,8399c9b,2.465619,0.015504121780395508,0.003756999969482422,148.0,258.0,358996,247476

BaseDirs,Project-Path,2024-06-07,1.10.3,jeb-kr-amherst,8399c9b,2.531882,0.014864921569824219,0.003782987594604492,147.0,254.0,381048,247352

BaseDirs,Project-Path,2024-06-08,1.10.3,jeb-kr-amherst,8399c9b,2.516802,0.01512002944946289,0.0037391185760498047,147.0,254.0,358844,247348

BaseDirs,Project-Path,2024-06-09,1.10.3,jeb-kr-amherst,8399c9b,2.543521,0.015519142150878906,0.0037348270416259766,142.0,259.0,359064,247352

BaseDirs,Project-Path,2024-06-10,1.10.4,jeb-kr-amherst,8399c9b,2.584906,0.01497507095336914,0.0037539005279541016,142.0,256.0,360256,247100

BaseDirs,Project-Path,2024-06-11,1.10.4,jeb-kr-amherst,8399c9b,2.599018,0.015102863311767578,0.003651142120361328,144.0,257.0,357544,246980

BaseDirs,Project-Path,2024-06-12,1.10.4,jeb-kr-amherst,8399c9b,2.593859,0.015147924423217773,0.003731966018676758,141.0,256.0,357892,247236

BaseDirs,Project-Path,2024-06-13,1.10.4,jeb-kr-amherst,8399c9b,2.549685,0.01514291763305664,0.0037279129028320312,148.0,256.0,357484,246972

This file has been truncated.