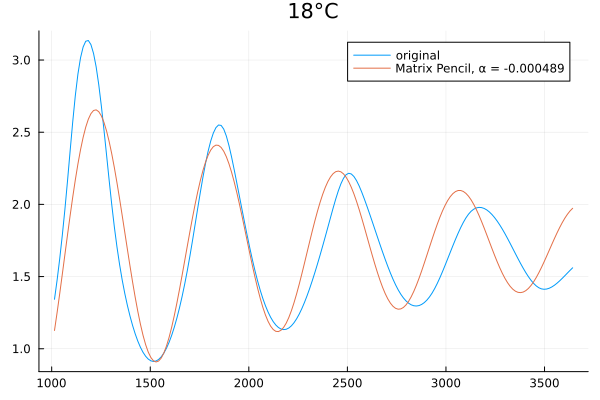

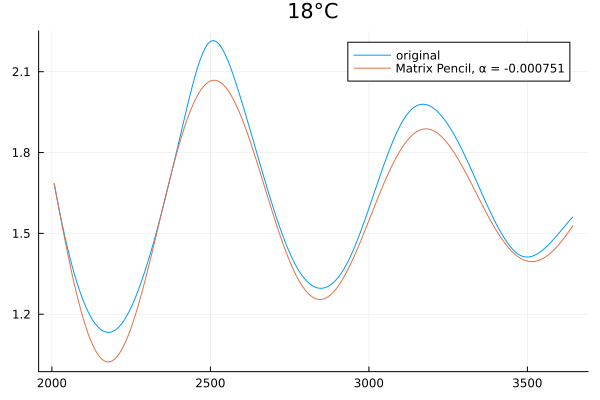

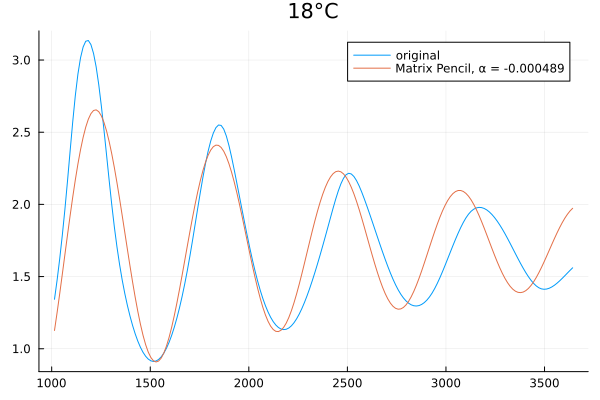

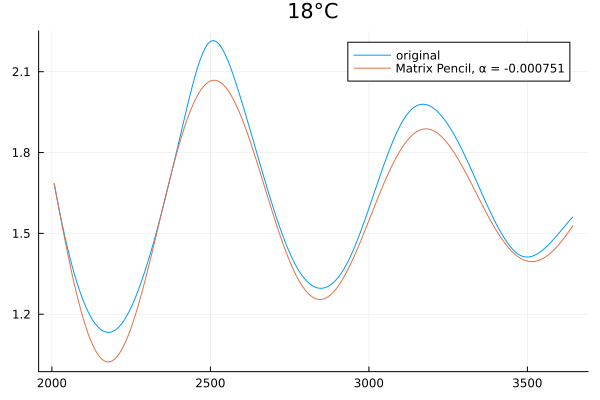

For my own edification, I decided to try to implement what I suggested. I used the matrix pencil algorithm from this paper, which is said to be superior to Prony’s method, after translating the code from Matlab to Julia. I tried fitting a pair (p=2) of complex exponential functions (because the original data is real) to the “tail” of the time series digitized from the first plot in the OP. The minimum time (abscissa) chosen to begin the “tail” was set to 500, 1500, 2000, 2500, and 3000. The resulting quality of fits and computed attenuation constants are shown below.

It appears from the last several plots that the attenuation constant is approximately 0.001 neper/sec (assuming the original time unit was seconds). But it’s possible that the system hasn’t yet approached its ultimate decay rate. Since it’s difficult to know a priori how far out in time to solve the system, it’s quite clear to me that the algorithm suggested by @stevengj is definitely the better way to approach this problem. (No surprise there!  )

)

In case people are interested, here is the listing for the matrix pencil algorithm:

using LinearAlgebra: eigvals, pinv

using ToeplitzMatrices: Hankel

"""

matrix_pencil(x::AbstractVector, p::Int, Δt::Real) -> (amp, α, f, θ)

Matrix pencil algorithm for fitting a time series with a sum of complex exponentials:

`` x(t) ≈ \\sum_{k=1}^{p} amp[k] * exp((2π*f[k]*im + α[k])*t + im*θ[k]) ``

# Input Arguments

- `x`: Time series sampled at a constant increment.

- `p`: Number of damped exponentials to be identified. For a real input `x`, `p` should be even.

- `Δt`: The time increment between samples.

# Return Values

- `amp`: Vector of amplitudes of length `p`.

- `α`: Vector of attenuation constants (units are nepers times the inverse of those of `Δt`).

- `f`: Vector of frequencies (units are the inverse of those of `Δt`).

- `θ`: Vector of phase constants (radians).

# Reference

This function is a translation to Julia by Peter S. Simon of the Matlab code

provided in the Open Access article Fernández Rodríguez, A., de Santiago Rodrigo,

L., López Guillén, E. et al., "Coding Prony’s method in MATLAB and applying it

to biomedical signal filtering", BMC Bioinformatics 19, 451 (2018),

https://doi.org/10.1186/s12859-018-2473-y.

The article is distributed under the terms of the Creative Commons Attribution 4.0

International License (http://creativecommons.org/licenses/by/4.0/), which permits

unrestricted use, distribution, and reproduction in any medium, provided you give

appropriate credit to the original author(s) and the source, provide a link to the

Creative Commons license, and indicate if changes were made. No additional restrictions

are placed on the Julia code by its author.

"""

function matrix_pencil(x::AbstractVector, p::Int, Δt::Real)

p > 0 || error("p must be a positive integer")

N = length(x)

Y = Hankel(x[1:N-p], x[N-p:N])

Y1 = Y[:, 1:end-1]

Y2 = @view Y[:, 2:end]

l = eigvals(pinv(Y1) * Y2)

α = log.(abs.(l)) ./ Δt

f = angle.(l) ./ (2π * Δt)

Z = zeros(Complex{real(eltype(x))}, N, p)

for i in eachindex(l)

factor = one(eltype(l))

for k in 1:N

Z[k, i] = factor

factor *= l[i]

end

end

for i in eachindex(Z)

zr, zi = real(Z[i]), imag(Z[i])

isinf(zr) && (zr = typemax(zr) * sign(zr))

isinf(zi) && (zi = typemax(zi) * sign(zi))

Z[i] = complex(zr, zi)

end

h = Z \ x

amp = abs.(h)

θ = angle.(h)

return (amp, α, f, θ)

end