We have released MadIPM, a GPU-accelerated interior-point solver for large-scale linear programming.

This work was done in collaboration with @sshin23 and @frapac.

MadIPM is built on top of MadNLP. It implements the Mehrotra predictor-corrector method.
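For readers unfamiliar with the method, here is a minimal textbook sketch of a Mehrotra predictor-corrector iteration for an LP in standard form (`min c'x` subject to `A x = b`, `x >= 0`). It is not MadIPM's code: it runs on the CPU, uses dense normal equations with a Cholesky factorization for brevity, and the names `mehrotra_lp` and `max_step` are made up for illustration.

```julia
using LinearAlgebra

# Largest step in [0, 1] that keeps v + alpha * dv nonnegative.
function max_step(v, dv)
    alpha = 1.0
    for i in eachindex(v)
        if dv[i] < 0
            alpha = min(alpha, -v[i] / dv[i])
        end
    end
    return alpha
end

# Illustrative dense solver for: min c'x  s.t.  A x = b, x >= 0.
function mehrotra_lp(A, b, c; tol=1e-8, max_iter=100)
    m, n = size(A)
    x, s, y = ones(n), ones(n), zeros(m)   # simple (infeasible) starting point
    for _ in 1:max_iter
        rp = A * x - b                     # primal residual
        rd = A' * y + s - c                # dual residual
        mu = dot(x, s) / n                 # average complementarity
        max(norm(rp), norm(rd), mu) < tol && break

        # Factorize the normal equations matrix A * D * A' once per iteration.
        d = x ./ s
        F = cholesky(Symmetric(A * Diagonal(d) * A'))

        # Solve the Newton system for a given complementarity right-hand side rc.
        function newton(rc)
            dy = F \ (-rp + A * (rc ./ s - d .* rd))
            ds = -rd - A' * dy
            dx = -(rc + x .* ds) ./ s
            return dx, dy, ds
        end

        # Predictor (affine scaling) step and centering parameter.
        dx_a, _, ds_a = newton(x .* s)
        mu_a = dot(x + max_step(x, dx_a) * dx_a, s + max_step(s, ds_a) * ds_a) / n
        sigma = (mu_a / mu)^3

        # Corrector step reuses the same factorization.
        dx, dy, ds = newton(x .* s + dx_a .* ds_a .- sigma * mu)
        ap = min(1.0, 0.99 * max_step(x, dx))
        ad = min(1.0, 0.99 * max_step(s, ds))
        x += ap * dx
        y += ad * dy
        s += ad * ds
    end
    return x, y, s
end

# Small example: minimize c'x over the probability simplex.
A = [1.0 1.0 1.0]; b = [1.0]; c = [1.0, 2.0, 3.0]
x, y, s = mehrotra_lp(A, b, c)
```

On this toy problem the iterate `x` should approach the vertex that puts all weight on the cheapest entry of `c`. The point to notice is that the predictor and corrector steps share one factorization per iteration, which is what makes the linear solver the dominant cost.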

Unlike other interior-point solvers, MadIPM is designed to run on the GPU. The linear systems arising in the interior-point method are solved with NVIDIA cuDSS, a sparse direct solver for GPUs.
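To give an idea of what these linear systems look like, consider the textbook setting: an LP in standard form, $\min c^\top x$ subject to $Ax = b$, $x \ge 0$, with multipliers $y$ and dual slacks $s$. Eliminating $\Delta s$ from the Newton step gives the symmetric indefinite "augmented" system below. This is the generic formulation, stated here as an assumption; the exact KKT formulation used inside MadIPM may differ.

$$
\begin{bmatrix} -X^{-1} S & A^\top \\ A & 0 \end{bmatrix}
\begin{bmatrix} \Delta x \\ \Delta y \end{bmatrix}
=
\begin{bmatrix} -r_d + X^{-1} r_c \\ -r_p \end{bmatrix},
\qquad
\Delta s = -X^{-1}\left(r_c + S\,\Delta x\right),
$$

where $X = \operatorname{diag}(x)$, $S = \operatorname{diag}(s)$, $r_p = Ax - b$, $r_d = A^\top y + s - c$, and $r_c$ is the complementarity right-hand side (for example $r_c = XSe$ in the predictor step). The matrix is sparse whenever $A$ is, and only its diagonal block changes from one iteration to the next, which is why a sparse direct solver is a natural fit.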

MadIPM also reuses the high-performance GPU kernels developed in MadNLPGPU using KernelAbstractions.jl. These include routines for assembling KKT systems, performing line search, and other critical operations in optimization solvers, along with new GPU kernels tailored specifically for the LP case.

If you want to give it a try, the code is available on GitHub.

Solving an LP on the GPU with JuMP simply amounts to:

```julia
using JuMP
using MadIPM
using CUDA, KernelAbstractions, MadNLPGPU

c = rand(10)

model = Model(MadIPM.Optimizer)
# Store the problem data on the GPU
set_optimizer_attribute(model, "array_type", CuVector{Float64})
# Solve the linear systems with cuDSS
set_optimizer_attribute(model, "linear_solver", MadNLPGPU.CUDSSSolver)

@variable(model, 0 <= x[1:10], start=0.5)
@constraint(model, sum(x) == 1.0)
@objective(model, Min, c' * x)

JuMP.optimize!(model)
```

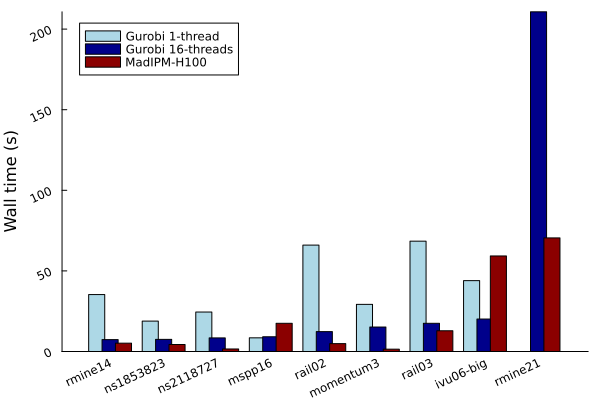

While performance depends on the problem, early benchmarks indicate that MadIPM can be competitive with Gurobi on large-scale LPs when run on a GPU.

Our paper, GPU Implementation of Second-Order Linear and Nonlinear Programming Solvers, was just accepted for presentation at the ScaleOPT workshop at NeurIPS 2025! It contains more details for those interested in the technical aspects.

I will also be giving a short talk about it before then, at JuMP-dev 2025 in New Zealand.