Hi everyone,

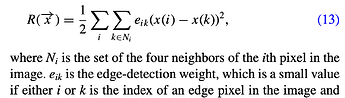

I’m trying to solve an optimization problem using Optimization.jl, but I’m struggling with incorporating a gradient term \nabla in the process. My cost function to minimize is:

where r^{A} and r^{B} involve log-sum-exponential terms:

Y^{i}, T^{1} and T^{2} are matrices of size n × m.

I first tried to solve the problem without the gradient term \nabla, “pixel by pixel” (basically with two nested for loops), and that works. I then tried to vectorize my residual function r over the whole matrix, but the optimization becomes extremely slow and no longer converges.

function f(K, v₁, v₂, T₁, T₂) # the theoretical value, compared to the measurements $Y^{i}$

x = sum(K[k] * exp.(-v₁[k] * T₁ - v₂[k] * T₂) for k in axes(K)[1])

return -log.(x / length(axes(K)[1]))

end

function r(T, p)

K, v₁, v₂, matrix_A, matrix_B, s = p

T₁, T₂ = view(T, :, 1:s), view(T, :, (s+1):size(T, 2)) # I concatenated T₁, T₂ to be able to use Optimization.jl

sum((f(K.A, v₁, v₂, T₁, T₂) .- matrix_A).^2 .+

(f(K.B, v₁, v₂, T₁, T₂) .- matrix_B).^2)

end

I think (?) vectorizing like this is the only way to include the gradient term \nabla in the optimization, since the “pixel by pixel” approach does not give access to neighboring values in the matrix.
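To make the idea concrete, here is a minimal sketch of how a gradient term could be attached to the vectorized objective. It is only an illustration under assumptions: I take the \nabla term to be a squared finite-difference penalty on T₁ and T₂, and the names `grad_penalty`, `r_reg` and the weight `λ` are hypothetical (they are not part of Optimization.jl). `f` is restated with the sum indexed by `k` so the snippet runs on its own.

```julia
# Hypothetical smoothness penalty: sum of squared forward differences along
# rows and columns, used here as a discrete stand-in for ‖∇T‖².
grad_penalty(T) = sum(abs2, diff(T; dims=1)) + sum(abs2, diff(T; dims=2))

# Theoretical value, same model as in the post (sum indexed by k).
function f(K, v₁, v₂, T₁, T₂)
    x = sum(K[k] .* exp.(-v₁[k] .* T₁ .- v₂[k] .* T₂) for k in eachindex(K))
    return -log.(x ./ length(K))
end

# Data term from the post plus the assumed penalty, weighted by λ.
function r_reg(T, p)
    K, v₁, v₂, matrix_A, matrix_B, s, λ = p   # λ is an assumed extra parameter
    T₁ = view(T, :, 1:s)
    T₂ = view(T, :, (s+1):size(T, 2))
    data = sum(abs2, f(K.A, v₁, v₂, T₁, T₂) .- matrix_A) +
           sum(abs2, f(K.B, v₁, v₂, T₁, T₂) .- matrix_B)
    return data + λ * (grad_penalty(T₁) + grad_penalty(T₂))
end
```

Because `diff` works on the whole matrix, every entry of T is coupled to its neighbors, which is exactly what the per-pixel loop cannot express. `r_reg(T, p)` keeps the same `(u, p)` signature as `r`, so it could be passed to the optimizer in the same way.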

I don’t know if all this is clear (I tried, haha), but does anyone have experience with this kind of problem or any relevant example?

Thanks in advance!

fdekerm