Announcing SymbolicApproximators.jl, a Julia package implementing Symbolic Aggregate approXimation (SAX) and related methods for symbolic discretization and dimension reduction. These techniques let you convert continuous-valued sequences (including, but not limited to, time series) into short symbolic sequences, so that you can then apply string algorithms and machine learning algorithms designed for categorical data.

(Note: registration should complete in a couple of hours, so you will not be able to install it from the General Registry just yet.)

So far I’ve only implemented a few algorithms; more will follow soon. I’ve aimed for this to be the fastest implementation of SAX and SAX-family algorithms available, and informal tests so far suggest that it may be. Basic usage of SAX seems to be about 8x faster than in R’s jmotif package, and more than 3000x faster than Python’s saxpy.

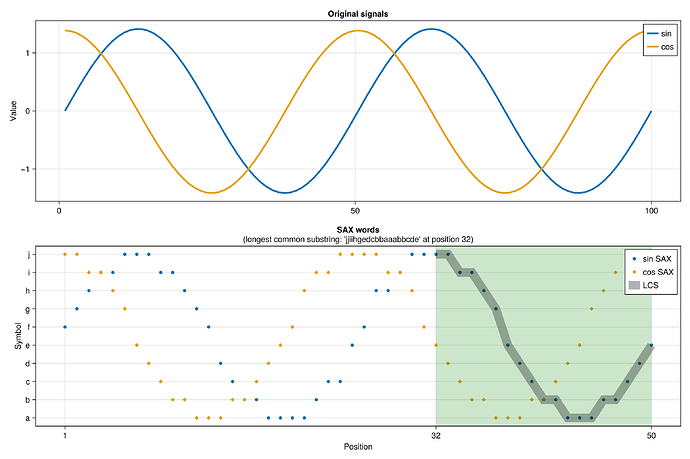

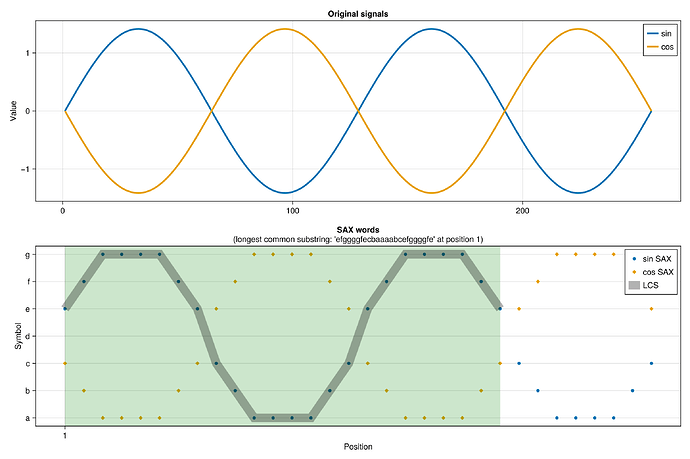

Here’s a quick demo. (See here for the full code.) If we have two continuous signals, let’s say a sine wave and a cosine wave, we can use this package to:

- convert them to reduced-size discrete representations
  - specifically, here we’ll use the symbols `a` through `j` and a “word” size of 50
- pass those reduced time series into a suffix automaton structure, with which we can compute the longest common substring (`lcs`) between them

```julia
using SymbolicApproximators
using SuffixAutomata
using StatsBase
using GLMakie

signal1 = (sin.(range(0, 4π, length=100)) |> x -> (x .- mean(x)) ./ std(x))
signal2 = (cos.(range(0, 4π, length=100)) |> x -> (x .- mean(x)) ./ std(x))

sax = SAX(50, 10) # we'll reduce with SAX to a "word" size of 50, alphabet size of 10

word1 = encode(sax, signal1)
word2 = encode(sax, signal2)

string1 = join(values(word1))
string2 = join(values(word2))

# find the longest common substring
automaton = SuffixAutomaton(string2)
pattern, position = lcs(string1, automaton)
```
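For anyone new to SAX, here is a rough from-scratch sketch of the textbook steps: z-normalize, take a piecewise aggregate approximation (segment means), then bin each mean against breakpoints that split a standard normal into equiprobable regions. This is not the package's implementation. The names `znormalize`, `paa`, and `sax_sketch` are made up for illustration, the breakpoints are hard-coded for an alphabet of size 4, and the series length is assumed to be divisible by the word size.

```julia
using Statistics  # standard library: mean, std

# Breakpoints splitting a standard normal into 4 equiprobable regions.
# Hard-coded for this sketch; a general version would compute normal quantiles.
const BREAKPOINTS_4 = [-0.6745, 0.0, 0.6745]

# z-normalize so that the Gaussian breakpoints are meaningful
znormalize(x) = (x .- mean(x)) ./ std(x)

# Piecewise Aggregate Approximation: the mean of each of `w` equal-length segments.
# Assumes length(x) is divisible by w (a simplification for this sketch).
function paa(x::AbstractVector{<:Real}, w::Integer)
    n = length(x)
    n % w == 0 || throw(ArgumentError("length(x) must be divisible by w"))
    seg = n ÷ w
    means = Vector{Float64}(undef, w)
    for i in 1:w
        lo = (i - 1) * seg + 1
        hi = i * seg
        means[i] = mean(view(x, lo:hi))
    end
    return means
end

# Map each segment mean to a letter by counting the breakpoints at or below it:
# 0 breakpoints -> 'a', 1 -> 'b', and so on.
function sax_sketch(x::AbstractVector{<:Real}, w::Integer; breakpoints = BREAKPOINTS_4)
    segment_means = paa(znormalize(x), w)
    letters = ['a' + searchsortedlast(breakpoints, m) for m in segment_means]
    return join(letters)
end

signal = sin.(range(0, 2π, length = 100))
word = sax_sketch(signal, 10)  # a 10-letter word over the alphabet a..d
```

With one full sine period, the word should start in the upper half of the alphabet and end in the lower half, since the segment means follow the shape of the wave.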

You can also use these methods to calculate the distance between approximated time series. Another demo here shows that the SAX distance approaches the true Euclidean distance between the original time series as you increase the word size and alphabet size.
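As a rough illustration of what a SAX distance looks like, here is a sketch of the distance usually defined for SAX words in the literature (often called MINDIST): adjacent symbols count as distance zero, and otherwise the distance is the gap between the breakpoints separating them. The function names `symbol_dist` and `mindist` are hypothetical and are not taken from the package, and the breakpoints are again hard-coded for an alphabet of size 4 (letters `a` to `d`).

```julia
# Breakpoints for an alphabet of size 4 (standard normal quartiles); an assumption
# for this sketch, matching words over the letters a..d.
const BETA = [-0.6745, 0.0, 0.6745]

# Distance between two symbols given as 1-based alphabet indices.
# Identical or adjacent symbols are treated as distance 0.
function symbol_dist(r::Integer, c::Integer; beta = BETA)
    abs(r - c) <= 1 && return 0.0
    return beta[max(r, c) - 1] - beta[min(r, c)]
end

# MINDIST between two SAX words of equal length w, where `n` is the length
# of the original (un-reduced) series.
function mindist(word1::AbstractString, word2::AbstractString, n::Integer; beta = BETA)
    w = length(word1)
    w == length(word2) || throw(ArgumentError("words must have equal length"))
    total = 0.0
    for (a, b) in zip(word1, word2)
        total += symbol_dist(a - 'a' + 1, b - 'a' + 1; beta = beta)^2
    end
    return sqrt(n / w) * sqrt(total)
end

d_same = mindist("abcd", "abcd", 100)  # identical words
d_far  = mindist("aadd", "ddaa", 8)    # every position differs by three symbols
```

Because adjacent symbols contribute nothing, a small alphabet gives a coarse distance; more breakpoints and more segments leave less of the original signal unaccounted for, which is consistent with the convergence described above.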