I recently registered a new package I’ve been working on: PrecisionCarriers.jl. It’s designed to let you quickly and easily check existing code for loss of precision in its calculations.

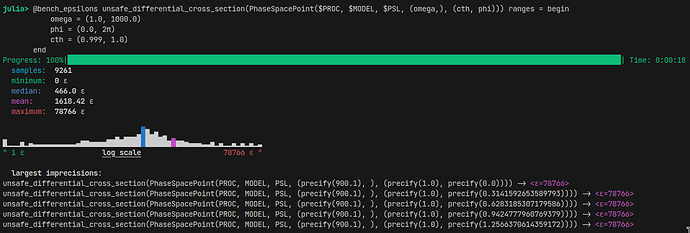

I added a macro inspired by BenchmarkTools.jl 's @benchmark macro, called @bench_epsilons. This lets you write a function call, interpolate values from your current scope into it, and define search ranges for variables that are then searched. The output gives a histogram of the observed precision loss, a printout of the number of “infinite” precision losses, if any, and a list of the worst observed precision losses, together with the function calls to reproduce them. Here’s an example of what it currently looks like in a particle physics application:

Internally, this works by wrapping the inputs in a PrecisionCarrier object, which performs every computation twice: once with the given value and type, and once with a BigFloat version of it. At the end of the calculation, the two results can be compared, and the error can be estimated as a number of machine epsilons (or, equivalently, as the number of significant digits remaining in the result).
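To make the idea concrete, here is a minimal sketch of such a "shadow" computation in plain Julia. It is not the package's implementation or API: the `Shadowed` type and the `epsilons` function are hypothetical names made up for this illustration, and only a handful of operations are forwarded.

```julia
# Hypothetical illustration only; NOT the PrecisionCarriers.jl implementation or API.
struct Shadowed{T<:AbstractFloat}
    x::T            # result computed in the original type
    big::BigFloat   # same computation carried out in BigFloat
end

# Wrap a plain value; the BigFloat shadow starts out exactly equal to it.
Shadowed(x::T) where {T<:AbstractFloat} = Shadowed{T}(x, BigFloat(x))

# Forward a few operations to both the value and its shadow.
Base.:+(a::Shadowed{T}, b::Shadowed{T}) where {T} = Shadowed{T}(a.x + b.x, a.big + b.big)
Base.:-(a::Shadowed{T}, b::Shadowed{T}) where {T} = Shadowed{T}(a.x - b.x, a.big - b.big)
Base.:*(a::Shadowed{T}, b::Shadowed{T}) where {T} = Shadowed{T}(a.x * b.x, a.big * b.big)
Base.:/(a::Shadowed{T}, b::Shadowed{T}) where {T} = Shadowed{T}(a.x / b.x, a.big / b.big)
Base.sqrt(a::Shadowed{T}) where {T} = Shadowed{T}(sqrt(a.x), sqrt(a.big))
Base.one(::Shadowed{T}) where {T} = Shadowed(one(T))

# Relative error of the low-precision result, in units of eps(T).
function epsilons(a::Shadowed{T}) where {T}
    a.x == a.big && return 0.0
    rel = abs((BigFloat(a.x) - a.big) / a.big)
    return Float64(rel / eps(T))
end

# Generic "existing code": works on Float64 and on Shadowed alike.
f(x) = sqrt(x * x + one(x)) - x

well_behaved = f(Shadowed(1.0))   # error stays around one epsilon
lossy        = f(Shadowed(1e8))   # the Float64 result collapses to 0.0

println(epsilons(well_behaved))
println(epsilons(lossy))
```

Because `f` is written generically, the same function runs unchanged on wrapped inputs. For `x = 1e8`, the `+ one(x)` is rounded away in Float64, so the final subtraction returns exactly zero while the BigFloat shadow keeps the small true result. The reported error is then many orders of magnitude larger than for `x = 1.0`.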

Precision is a difficult thing to get right or have a feeling for, and I think it’s helpful to have a good guess about how precise the results of some calculations actually are, especially in scientific code. Contrary to popular belief, there are many more possible ways to introduce precision loss than just catastrophic cancellation or very long chains of arithmetic.

There are a lot of things that can still be improved here, and I have a few ideas in mind already, but I’d also be interested in what others think. Maybe some people want to give it a try and have feedback.

I found a few similar packages, but none of them seem intended to actually detect code that loses precision:

- FloatTracker.jl specifically finds NaN and Inf values

- DoubleFloats.jl, MultiFloats.jl, ArbFloats.jl, etc. provide floating point types with more precision

- ValidatedNumerics.jl provides a way to have known error bounds on calculations, which is probably the closest to what I wanted to achieve with my package